Building a Gold Standard for NZZ OCR Quality Assessment

Getting equipped with data feels like Christmas for a digital humanist (this sentence actually got me thinking about the name of my blog and whether it would be better to change it to "lifeofadigitalhumanist" :-), but naah!). And so I was quite happy when we received a 6TB external hard drive with all the NZZ data from 1780 to 2017 on it. Within the impresso project (see www.impresso-project.ch), we work with texts up to 1950, which in the case of the NZZ still amounts to 2TB of PDFs full of text and image data.

[Figure: The external hard drive containing the NZZ newspapers.]

So, the work of text mining 170 years of a historical newspaper could begin. Or so we thought. We realised very quickly that the OCRed text the NZZ had so kindly delivered was not nearly as good as we had hoped. The quality of the images also leaves much to be desired. There are two main reasons for this. First, as the NZZ approached its 225th anniversary in 2005, it decided to have all its texts digitised. In order to save time (and probably also money), the NZZ had its texts digitised from microfilm, resulting in low-quality images like the one below.

[Figure: Low-quality image from the NZZ, with text from another page shining through.]

In order to achieve good OCR results, most software expects images scanned from paper at a resolution of at least 300 dpi. A two-stage digitisation like the one carried out by the NZZ (paper to microfilm, then microfilm to digital image) does not guarantee this image quality and thus results in pretty bad source material for the actual OCR. Second, we have been informed that the NZZ distributed the material to several institutions to speed up the digitisation. This resulted in a lack of control over the whole process, and the final product is therefore very inconsistent. Moreover, when we encounter very bad OCR results, we can now only guess what might have gone wrong during the digitisation. Let's have a look at the text from the page above:

[Figure: OCR result from 1811]

We see that we hardly get a single sentence right, and often not a single word in a line is recognised correctly. It goes without saying that even automatic OCR post-correction cannot do much here. And since our aim is to apply text mining techniques to this data, it is evident that we will not be able to achieve much with it. As the saying goes, "garbage in, garbage out", and this is super-garbage.

So, we decided to tackle the problem and produce a ground truth in order to measure accurately how horrible the situation is. For the gold standard creation, we used (or rather, are using) the Transkribus framework. Originally conceived as a tool for handwriting recognition, it also supports quick and easy transcription of historical printed documents.

[Figure: Transkribus interface for ground truth creation]

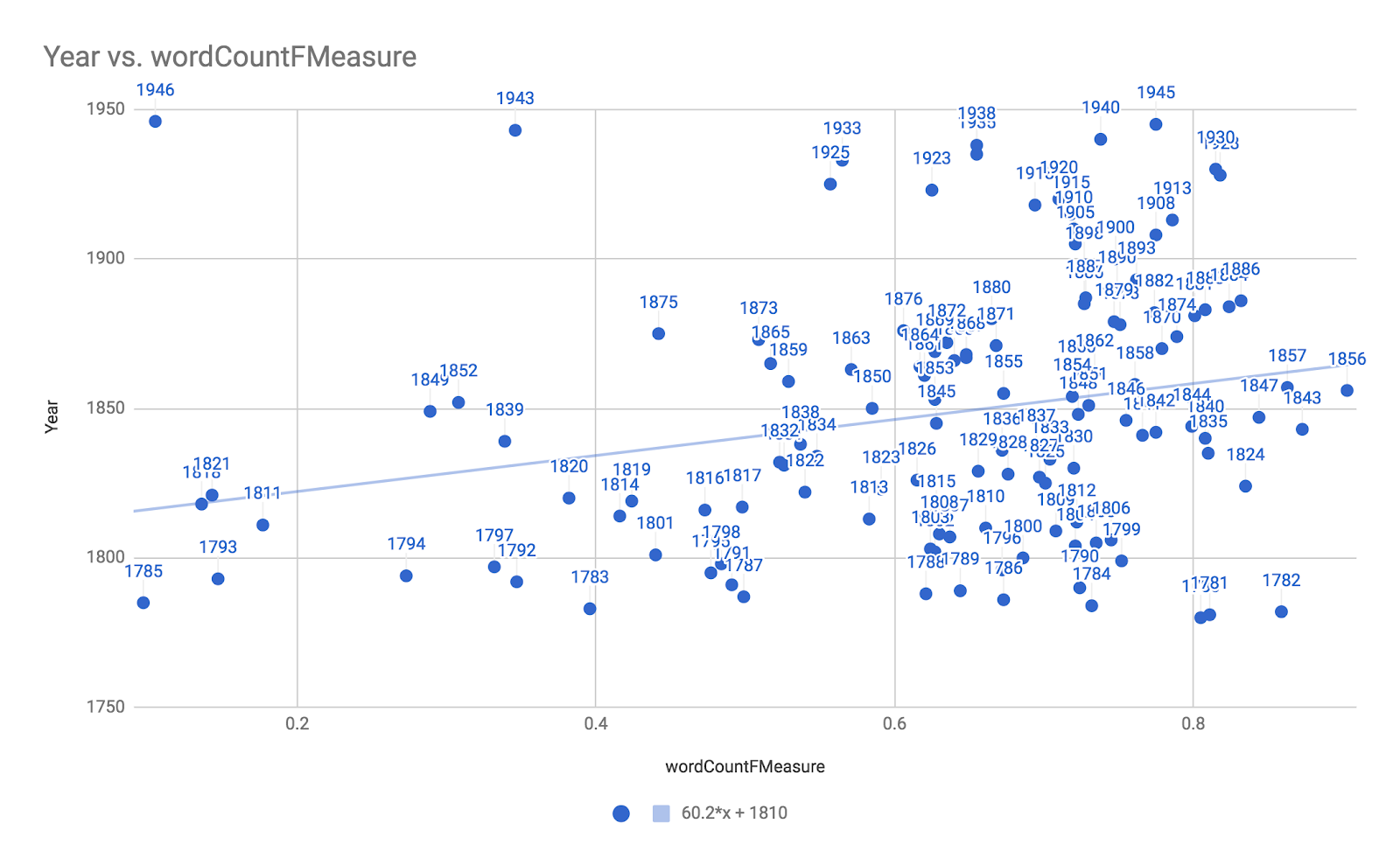

Generally, the recognition of black letter is more challenging than antiqua. And since the NZZ published in black letter until 1947, we decided to forgo the creation of a gold standard for the 3 years antiqua until 1950, at least for the NZZ. Hence, we randomly chose a front page for each year from 1780 to 1947, which amounts to a total of 167 front pages to be transcribed. So far, we transcribed 130 pages. This amounts to a total of about 300,000 words. Aside from some fixes in the layout (the Transkribus software recognises baselines, lines, words, paragraphs, and separators, among others), we transcribe on the word level, join or split letters or words where appropriate and add them if the software has not recognised them. Although this being a very time consuming task, perliminary results already show stark contrasts between OCR versions. When we compare the OCR that came with the NZZ against the OCR that is carried out in Transkribus using

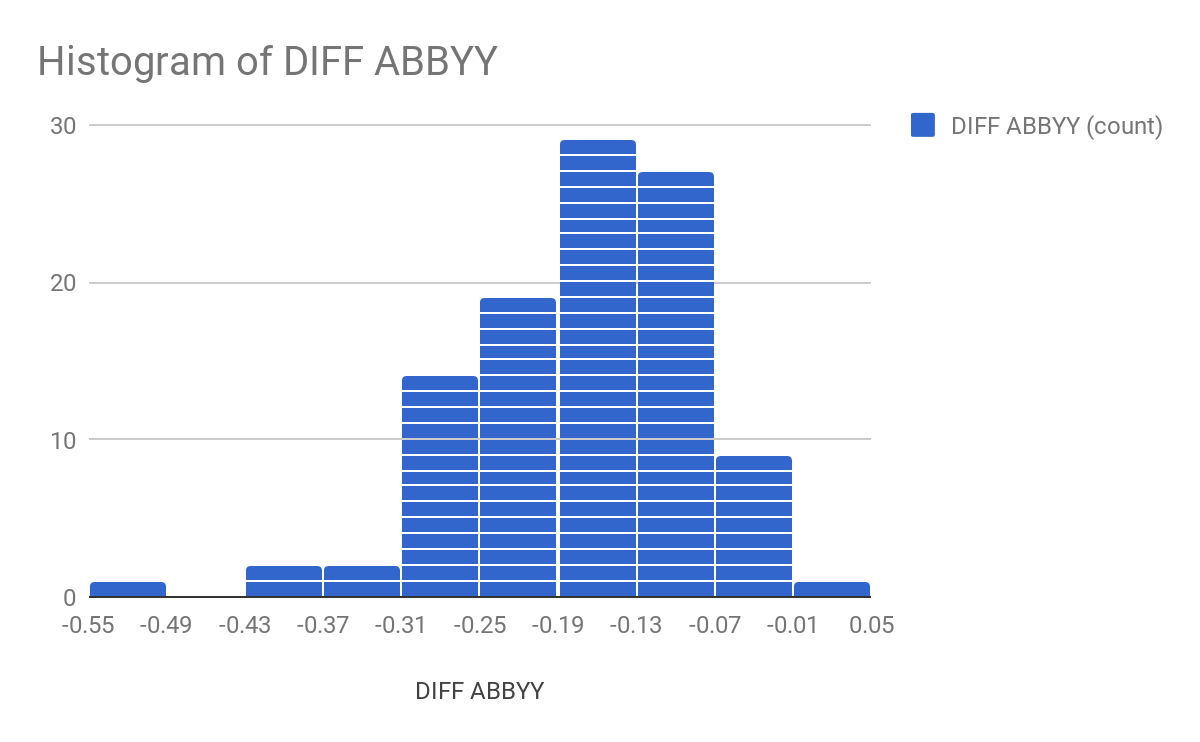

ABBYY Finereader, we see that already simple re-OCRing improves the text quality by about 17% (F1 score). We used bag-of-words evaluation, since this measure is robust against text zone orders.
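To illustrate why a bag-of-words measure does not care about text zone order, here is a minimal sketch of a bag-of-words precision/recall/F1 computation. It is not the exact evaluation script we used: the whitespace tokenisation, case-sensitive matching and the function name `bag_of_words_f1` are assumptions made for illustration.

```python
from collections import Counter


def bag_of_words_f1(ocr_text: str, gold_text: str) -> tuple[float, float, float]:
    """Return (precision, recall, F1) of OCR output against a gold standard,
    treating both texts as multisets (bags) of words.

    Assumption: tokens are whitespace-separated and compared case-sensitively.
    """
    ocr_bag = Counter(ocr_text.split())
    gold_bag = Counter(gold_text.split())
    # Multiset intersection: each token is counted min(ocr_count, gold_count) times,
    # so word order (and hence text zone order) plays no role.
    matched = sum((ocr_bag & gold_bag).values())
    n_ocr = sum(ocr_bag.values())
    n_gold = sum(gold_bag.values())
    precision = matched / n_ocr if n_ocr else 0.0
    recall = matched / n_gold if n_gold else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# Illustrative example: the OCR swaps two text zones and misreads "Die" as "Dic".
print(bag_of_words_f1("erscheint täglich Dic Zeitung", "Die Zeitung erscheint täglich"))
# → (0.75, 0.75, 0.75)
```

Only the misrecognised word costs anything here; the reordering of the two halves is ignored. A sequence-based measure would additionally penalise the reordering, which is exactly what we want to avoid when the OCR engines segment the page into zones differently.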

[Figure: OCR quality delivered by the NZZ]

[Figure: OCR quality after re-OCRing using ABBYY FineReader in Transkribus]

[Figure: Histogram of the difference between ABBYY FineReader and NZZ OCR]

In February 2018, we presented these results to the NZZ. They had already suspected such "bad news". They saw the problem and understood that all future work we do on their data would be severely hampered by the bad OCR. This led those responsible at the NZZ to consider an extensive re-digitisation of their material, using the paper originals instead of microfilm. As a next step, we are therefore looking for ways to raise funds to finance this undertaking. Another positive effect of our work with the NZZ data is that the NZZ now makes its data from 1780 to 1950 available for scientific purposes, which has eased the NDA we signed with them (they were the strictest project partner so far, providing their data only for project-internal use). In this respect, the impresso project has already had some impact :-).

But back to the gold standard. Given enough material, Transkribus also allows you to train your own Handwritten Text Recognition (HTR) models using recurrent neural networks (RNNs). Our material is not yet ready for this training, since the baselines need to be adjusted (the kind Transkribus team offered to help us out here, thanks!). However, there are other models that have been trained on black letter, e.g., a model trained on the Wiener Diarium (Wiener Zeitung). This newspaper is the main focus of the Diarium project, which seeks to provide the infrastructure for large-scale annotation of the Wiener Diarium.

Günter Mühlberger from Transkribus quickly applied the Diarium model to our data, which again showed a significant improvement over ABBYY FineReader (a Character Error Rate, CER, below 2%). We now hope that a model trained on our own data will improve this value further and get below 1% CER. A joint model trained on both the Diarium and our data might yield even better results.
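For readers unfamiliar with the measure, here is a minimal sketch of how a character error rate is commonly computed: the Levenshtein edit distance (character insertions, deletions and substitutions) between OCR output and ground truth, divided by the number of ground-truth characters. This is the textbook definition, not necessarily the exact computation Transkribus performs (e.g. its whitespace or normalisation handling may differ); the function names are hypothetical.

```python
def levenshtein(a: str, b: str) -> int:
    """Minimum number of character insertions, deletions and substitutions turning a into b."""
    # Dynamic programming, keeping only the previous row of the table.
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(
                prev[j] + 1,               # delete ca
                curr[j - 1] + 1,           # insert cb
                prev[j - 1] + (ca != cb),  # substitute (free if the characters match)
            ))
        prev = curr
    return prev[-1]


def character_error_rate(ocr_text: str, gold_text: str) -> float:
    """CER = edit distance between OCR and ground truth / number of ground-truth characters."""
    if not gold_text:
        raise ValueError("ground truth must not be empty")
    return levenshtein(ocr_text, gold_text) / len(gold_text)


# One substituted character in a 7-character word gives a CER of 1/7 (about 14%).
print(character_error_rate("Zeitnng", "Zeitung"))
```

A CER below 2% thus means fewer than two character edits per 100 ground-truth characters. Unlike the bag-of-words F1, the CER compares character sequences, so it assumes that OCR output and ground truth are aligned in the same reading order (for instance, line by line).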

In retrospect, creating a gold standard was absolutely necessary. Frameworks like Transkribus help us a lot in this undertaking, and now, with an NZZ-specific recognition model in sight, the gold standard seems to pay off. Not only are we able to assess the quality of OCR output, but we can also use the material to train models that do better than commercial software! Moreover, our work with the NZZ material has led to an easing of the NDA with the NZZ and has made a re-digitisation likely, which would yield high-quality images and thus even better OCR output. We look forward to further work on the NZZ texts :-).
